Garbage In, Garbage Out

Watch Out for The 53% "Study"

Checkmate, critics of so-called “protected” bicycle lanes!

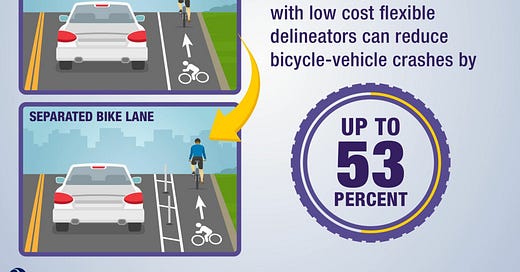

The High Priests at the US Federal Highway Administration (FHWA) have been promoting the following claim.

The full 122-page study is here.

If it hasn’t already, it is likely to become a quick talking point among supporters of so-called “protected,” or separated, bike lanes (SBLs), in a similar manner to the infamous bullshit Marshall and Ferenchak “study” that claimed SBLs make the roads safer for all users.

“Safe for all road users”

“This paper provides an evidence-based approach to building safer cities,” begins the conclusion of the study, “Why cities with high bicycling rates are safer for all road users,” a study conducted by academics Wesley Marshall and Nicholas Ferenchak. Published in 2019 in the

Keri Caffrey, co-founder of the Cycling Savvy educational program, last year pointed out the FHWA “study” and its provocative “statistic” in her article How to Ruin a Buffered Bike Lane.

Of course, the devil is often in the details, and the details are far less appealing than sexy-sounding platitudes.

She included a discussion chock full of technical criticisms including the researchers leaving out the very location - intersections - where the vast majority of car-bike collisions occur.

She also wrote of the FHWA’s soundbite-style social media promotion of its study:

The FHWA social-media post and linked documentation read and look like a commercial advertisement. An “up to” claim is an advertising trick to mention only a single most favorable result without incurring legal jeopardy. Such claims are common for weight-loss programs, car ads promoting gas mileage or EV range, and the like. From a government agency purporting to show the results of research that affects public safety, it’s… troubling.

Now Paul Schimek has jumped in with a critique he shared with Judy Frankel, a regular participant on the Facebook group Encinitas: Please Restore Safe Cycling in Cardiff.

Schimek, via Frankel, posted the following; minor formatting changes have been made here for legibility.

Why There is No Useful Data on the Safety of SBLs in Developing Crash Modification Factors for Separated Bicycle Lanes (FHWA-HRT-23-078)

Key Problems

They wanted to collect bicycle count data but the pandemic interfered with their data collection, so instead they synthesized (made up) bicycle counts based on sparse bicycle count data and counts of total traffic (mostly not bicycles). The method differed in each of the three study cities: "the bicycle exposure variable, known as the AADB, was estimated based on a variety of bicycle count types that included short-term bicycle counts, periodic counts that occurred regularly (usually every 2 yr), and a few permanent bicycle count stations." (p. 103)

The study is cross-sectional, which is a very weak study design, since there can be many factors that affect crash rates in different locations. They argue that longitudinal studies are not good, but mostly because it is hard to collect the data (e.g., when bike lanes were added or modified, and bike and crash counts before and after; see p. 73). In fact, before-and-after studies are much more methodologically sound, if done well.

They only compared SBLs to "traditional" bike lanes, not to streets without bike lanes.

They did not include intersections in their models. "For each city, the data were separated in two subsets: segments and intersections. The following sections document analyses and results, first by the different city datasets developed for this research and then as a combined dataset. The research team did not successfully develop CMFs for intersections or corridors with SBLs." (p. 78) Also p. 88: "This study included an analysis that focused on roadway segments. The research team assessed the prospect of using intersection-only and corridor-type models but found that for the available dataset, these two options did not yield statistically viable results."

Specific "Results"

San Francisco

Model includes 384 bike lane segments, meaning between intersections, and excluding segments with no bike lanes.

Both original and expanded model including many explanatory variables found no statistically significant effect of bike lanes on crashes (Tables 44 & 46), but somehow they claim there is a statistically significant difference between the types of bike lanes (e.g. Table 47).

Cambridge

Their model includes 179 bike lane segments, meaning between intersections, and excluding segments with no bike lanes. Their model including many explanatory variables found no statistically significant effect of bike lanes on crashes (Table 49), nor were they able to find a statistically significant difference between the types of bike lanes (Table 50).

So instead they combined the Cambridge and San Francisco data, even though the crash data and bicycle exposure data methods were different, and still they found no statistically significant relationship (Table 51), but as with San Francisco alone, they inexplicably found differences among the different types of SBLs (but the results are not explained and are not part of the model).

Seattle

Their model includes 660 bike lane segments, meaning between intersections, and excluding segments with no bike lanes. Their model including many explanatory variables found no statistically significant effect of bike lanes on crashes (Table 55). As with Cambridge, they were not able to find a statistically significant difference between the types of bike lanes (Table 56), using an unexplained method.

Combined Model

Instead of concluding that there was no relationship between bike lanes and crashes in segments, as they had already found for intersections, they decided to combine all three cities, despite the large differences in the way they synthesized bicycle counts (and also likely differences in crash reporting). In addition, "the team decided to remove all segments with either AADB or ADT having values of zero" p. 92. This change produced the finding, unlike in all of the other models, that BLs with "Flexi-post vertical elements" reduced crashes. They produce a table with unclear origins (Table 60) that claims that Flexible Posts reduce crashes compared to either traditional bike lanes or buffered bike lanes, but sites with "blended vertical elements" did not show that reduction unless they combined them with the Flexible Posts sites.

They do not provide confidence intervals for their estimated CMFs, but they seem to be very large. For example, their coefficient estimate for Flexible Posts vs Traditional BLs is −0.698 with a standard error of 0.264. At the traditional 95% level of confidence, the estimate ranges from −1.215 to −0.181. Exponentiating those bounds gives a CMF of roughly 0.30 to 0.83, which means that instead of an estimated 50% reduction in crashes, the reduction could be anywhere from about 17% to 70%, except that the flaws in the methodology listed above mean that we have no assurance that even this range represents the real reduction in crashes between intersections when adding flex posts to a bike lane, particularly since the results for each city separately showed no statistical significance at all.
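
The interval arithmetic in the paragraph above can be checked with a few lines of Python. This is only a minimal sketch, not the study's own code. It assumes the coefficient comes from a log-link count model, so that the CMF is the exponential of the coefficient, and it uses the normal approximation with z = 1.96. The function name `cmf_interval` is made up for illustration.

```python
import math


def cmf_interval(coef, se, z=1.96):
    """Return (cmf, cmf_low, cmf_high) for a log-link coefficient.

    Assumption: CMF = exp(coefficient), with a normal-approximation
    confidence interval computed on the coefficient scale.
    """
    coef_low = coef - z * se
    coef_high = coef + z * se
    return math.exp(coef), math.exp(coef_low), math.exp(coef_high)


# Values quoted from the critique: Flexible Posts vs Traditional BLs.
coef, se = -0.698, 0.264
cmf, cmf_low, cmf_high = cmf_interval(coef, se)

print(f"coefficient interval: {coef - 1.96 * se:.3f} to {coef + 1.96 * se:.3f}")
print(f"CMF: {cmf:.2f} ({cmf_low:.2f} to {cmf_high:.2f})")
# Percent reduction is 1 - CMF, so the high CMF bound is the small reduction.
print(f"reduction: {1 - cmf:.0%} ({1 - cmf_high:.0%} to {1 - cmf_low:.0%})")
# coefficient interval: -1.215 to -0.181
# CMF: 0.50 (0.30 to 0.83)
# reduction: 50% (17% to 70%)
```

Under those assumptions, the CMF bounds of about 0.30 and 0.83 correspond to crash reductions of about 70% and 17%, respectively, around a point estimate of about 50%.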

Furthermore, Steve Linke posted a reply to Frankel’s post with his own critiques:

I independently analyzed that same study and came up with a similar list of limitations/critiques.

The study cited by the FHWA for the 53% crash reduction modeled data from Cambridge, MA, San Francisco, CA, and Seattle, WA, and then attempted to validate their safety model on data from Austin, TX and Denver, CO. But here are some examples of the inconclusive nature of the alleged safety findings:

1. The study does not make clear how the crash numbers were collected. As we have observed, it is far less likely that solo bike crashes are captured in crash data after SBLs are installed, so the study may overestimate safety improvements by underreporting post-SBL crashes.

Quote from the study: "Possibly, other bicycle crashes may have occurred and were not reported. The research team was unable to incorporate these unreported crashes into the scope of this study."

2. Related to #1, the "small print" in the FHWA marketing clarifies that the 53% reduction applies only to vehicle/bicycle crashes, as opposed to also including solo bike crashes, which tend to be the vast majority of crashes and can be very serious or fatal.

3. The FHWA marketing says "up to" 53%, which means they cherry-picked the best result within the study.

4. Bike volume data were largely unavailable for the study, so many assumptions and modeling were used to estimate volumes, which may not be accurate and can bias the result toward a desired outcome.

5. SBLs are typically constructed on street "corridors," which are comprised of "intersections" plus the street "segments" between the intersections. The safety findings could only be modeled on the individual segments between intersections (mid-block crashes). The safety modeling failed when the full corridors were studied, suggesting that crashes at intersections counter-acted any mid-block safety benefits of SBLs.

Quote from the study: "Initially, the research team hoped to develop [safety estimates] for segments and intersections, but their attempts to model intersections and/or entire corridors were unsuccessful. Consequently, the team focused on developing robust [safety estimates] for segments [only]."

6. This study found that flex posts alone improved safety, but that when other vertical elements were added in addition to flex posts, such as wheel stops/berms, the amount of safety improvement decreased. Other studies have claimed that flex posts alone do not improve safety. So, the conclusions are a bit of a mess.

7. While the safety model produced from the Cambridge, San Francisco, and Seattle data was allegedly validated in the Austin data, it failed in the Denver data, raising serious questions about the reproducibility of the results.

Unfortunately, this is all quite complex and time-consuming to explain, and it is easy for others to cite simple marketing claims from seemingly authoritative sources like the FHWA.